Fuzz Testing YottaDB

K.S. Bhaskar

Summary

Every day, we find fault with our software, so that you don’t!

Robustness in software is a mark of quality that’s often easy to lose in development.

Thanks to Zachary Minneker of Security Innovation, Inc., we are implementing fuzz testing to make our software even more robust. Fuzz testing provides us with one more way to generate test cases to test that the software does not do what it is not supposed to do. As expected from a new form of testing, we have discovered bugs that we did not know existed, and which no user has reported to us.

Fuzzing

The word “fuzz” is hundreds of years old. More recently, it has been used in music for deliberate distortion, typically of guitar sounds. Fuzzing as a test method is relatively recent. At its most basic, fuzzing involves running a piece of software over and over with generated inputs, usually invalid, while attempting to exercise as much code as possible. As the state of the art has advanced, fuzzing techniques have grown more elaborate and now involve complex tooling.

Discarding invalid input, rejecting it, or reporting it are all appropriate behaviors, depending on the software specification. Process termination (unless that is the specified behavior for invalid input), inconsistent internal state (such as overwritten memory), or, worse yet, other undesirable behavior are bugs to be fixed.

Fuzzing YottaDB

Zachary Minneker from Security Innovation tested YottaDB by feeding the compiler manipulated M code. While it is certainly possible to input randomly generated bytes, the parser would reject most of that input, and the fuzzing would not be efficient, even though it would theoretically eventually expose every issue with the software (see the Infinite Monkey Theorem). To fuzz efficiently, Zachary started with a corpus of M source code from the YottaDB automated test suite, and made some minor changes to YottaDB to make unexpected behavior easier to detect. Then a 24-core machine running the prepared fuzzing harness and corpus fuzzed YottaDB for a number of days, over the course of several months.

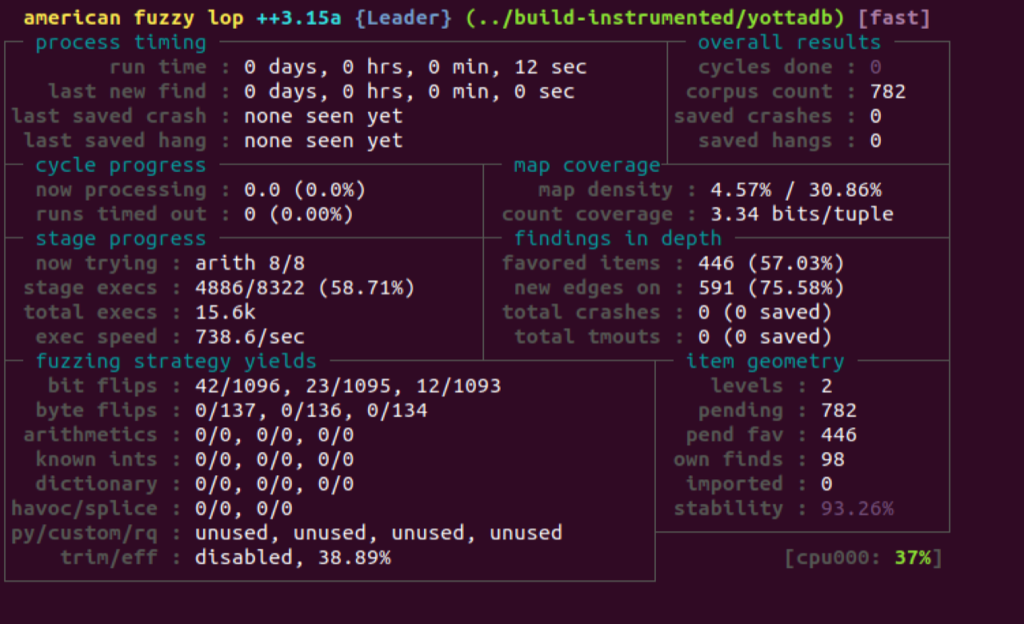

He used AFL++ to generate fuzzed input to YottaDB. AFL++ works by using special tracing instrumentation which marks conditional paths through YottaDB. When YottaDB runs a mutated input, the instrumentation notes which conditional paths are taken, which reveals the exact path taken through the code. If an input causes new paths to be taken, it is added to the corpus for further fuzzing, which in turn increases the number of code paths exercised.
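The feedback loop described above can be sketched in a few lines of Python. This is a toy illustration of coverage-guided fuzzing in general, not AFL++ itself and not the harness used against YottaDB: `toy_target`, `mutate`, `fuzz`, and `ALPHABET` are hypothetical names, and the "instrumentation" is simply a function that returns the set of branches an input reached.

```python
import random

ALPHABET = b"SET =1"  # assumed tiny alphabet so the toy converges quickly


def toy_target(data: bytes) -> set:
    """Hypothetical stand-in for an instrumented program.

    Returns the set of branch IDs that the input reached.
    """
    branches = {"start"}
    for prefix in (b"S", b"SE", b"SET"):
        if not data.startswith(prefix):
            break
        branches.add(prefix.decode())
    return branches


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Apply one random mutation: replace, insert, or delete a byte."""
    buf = bytearray(data)
    choice = rng.randrange(3)
    if choice == 0 and buf:
        buf[rng.randrange(len(buf))] = rng.choice(ALPHABET)
    elif choice == 1:
        buf.insert(rng.randrange(len(buf) + 1), rng.choice(ALPHABET))
    elif choice == 2 and buf:
        del buf[rng.randrange(len(buf))]
    return bytes(buf)


def fuzz(seeds, iterations, rng):
    """Keep any mutated input that reaches a branch not seen before."""
    corpus = list(seeds)
    seen = set()
    for seed in corpus:
        seen |= toy_target(seed)
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        coverage = toy_target(candidate)
        if not coverage <= seen:  # at least one new branch
            corpus.append(candidate)
            seen |= coverage
    return corpus, seen


if __name__ == "__main__":
    corpus, seen = fuzz([b"S"], 20000, random.Random(0))
    print(sorted(seen), corpus)
```

Inputs that reach nothing new are thrown away, so the corpus grows only when coverage grows. That is the property that lets a fuzzer starting from real M code work its way into deeper parts of the compiler instead of being stopped at the parser.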

Since YottaDB has signal handlers for normal operation, as well as to catch signals in order to shut down cleanly, he commented out the signal handling, as it would interfere with the testing. In some cases, he used AddressSanitizer, a tool which makes otherwise difficult-to-detect memory bugs crash the application.

YottaDB can respond to input M code in different ways:

- Flag a syntax error. Since YottaDB is expected to generate syntax errors for syntactically incorrect code, this input is not very interesting from a testing point of view.

- Run the code. This is also not very interesting from a fuzz testing point of view: even if the code adds 2 and 2 to get 5, that should be caught by normal functional testing.

- Report an address error, when YottaDB is built with AddressSanitizer. This is a YottaDB failure to be captured.

- Crash. This is potentially a YottaDB failure, because except in cases such as a process sending itself a signal with $ZSIGPROC(), processes should not terminate abnormally.

- Hang. A hang by itself does not tell the fuzz tester much because the fuzzed code might include a syntactically correct infinite loop. But a hang might indicate a memory bug.
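The outcomes listed above map onto what a test harness can observe about a child process. The following is a minimal sketch, assuming a harness that launches the program under test with Python's `subprocess` module. The function names and category labels are hypothetical, and `cmd` is whatever command line the harness uses (none is assumed here). The only tool-specific detail relied on is that AddressSanitizer reports contain the text `ERROR: AddressSanitizer`.

```python
import signal
import subprocess


def classify_run(returncode: int, stderr: str, timed_out: bool) -> str:
    """Map one observed run to a coarse outcome category."""
    if timed_out:
        # Could be a legitimate infinite loop in the fuzzed code, or a bug.
        return "hang"
    if "ERROR: AddressSanitizer" in stderr:
        return "sanitizer-report"
    if returncode < 0:
        # subprocess reports death by signal N as returncode -N.
        # Not always a bug: the fuzzed code may have signaled itself.
        try:
            name = signal.Signals(-returncode).name
        except ValueError:
            name = str(-returncode)
        return "crash:" + name
    # Covers both "ran the code" and "flagged a syntax error"; telling
    # those apart would need the program's own error text.
    return "exited"


def run_once(cmd, data: bytes, timeout_s: float = 5.0) -> str:
    """Run cmd with data on stdin and classify what happened."""
    try:
        proc = subprocess.run(
            cmd, input=data, capture_output=True, timeout=timeout_s
        )
    except subprocess.TimeoutExpired:
        return classify_run(0, "", True)
    stderr = proc.stderr.decode(errors="replace")
    return classify_run(proc.returncode, stderr, False)
```

Only the `sanitizer-report`, `crash`, and `hang` categories are worth a human's attention, and the last two still need review before being counted as failures.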

Some bugs may have multiple ways in which they can be triggered. Zachary eliminated duplicates, created minimal inputs for each failure, and performed root cause analysis. After months of work, he identified failures that are captured as CVEs and as a YottaDB Issue against which our development team is creating fixes.
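To give a sense of what creating a minimal input involves, here is a simple greedy reduction sketch in Python. It is an illustration of the general idea, not the procedure Zachary followed (AFL++ ships its own minimizer, `afl-tmin`). The `still_fails` callback is a hypothetical predicate that reruns the program and reports whether the same failure occurs, and `$BUG(` in the usage example is a made-up trigger.

```python
def minimize(data: bytes, still_fails) -> bytes:
    """Greedily delete chunks of the input while the failure persists.

    Tries large chunks first, then halves the chunk size down to one byte.
    """
    if not still_fails(data):
        raise ValueError("input does not reproduce the failure")
    chunk = max(len(data) // 2, 1)
    while chunk >= 1:
        i = 0
        while i < len(data):
            candidate = data[:i] + data[i + chunk:]
            if still_fails(candidate):
                data = candidate  # keep the smaller input, retry same offset
            else:
                i += chunk  # this chunk is needed, move on
        chunk //= 2
    return data


if __name__ == "__main__":
    # Hypothetical failure: any input containing the made-up text "$BUG(".
    def fails(d):
        return b"$BUG(" in d

    print(minimize(b"set x=1 write $BUG(x) quit", fails))
```

A smaller reproducer makes root cause analysis easier, and it also helps with eliminating duplicates, since two long inputs that trigger the same bug often reduce to the same or very similar minimal input.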

Practical Implications

Failures identified by Zachary’s fuzz testing are potential security issues because:

- A crash is in principle a denial of service.

- An error that overflows a buffer or overwrites memory is in principle a vector for attack if an attacker is able to load an exploit into the overwritten memory and execute it.

- An error that causes bad object code to be generated is in principle a vector for attack if an attacker can load code to be executed into the object code.

As a practical matter:

- The failures are caused by code which is syntactically correct, but functionally useless in the context of an application. Any YottaDB deployment is controlled code that has gone through developers and is tested: your YottaDB application certainly would not execute code from the web without validating it.

- Exploiting any of the vulnerabilities requires the ability to modify the M code which your system is executing, which requires login access to the system.

- Except for process crashes, where the crash itself is the exploit, vulnerabilities are chinks in the armor, not exploits. Turning these vulnerabilities into an exploit (which requires M code to be executed) would require an order of magnitude, or more, of effort.

Controlling access to your system and validating the M code it executes are two key layers of defense against all vulnerabilities.

What Next?

Since security is a journey, not a destination:

- We are accelerating the YottaDB r1.34 release with fixes for the issues identified by Zachary’s fuzzing, and expect to release it in mid-Q1 2022, rather than later in the year. This means that some other issues targeted for r1.34 will be deferred to r1.36.

- Unlike tests in our automated test suites, where you can run a test and have it pass or fail, fuzz testing is a type of random walk that you run continuously till you find something interesting. We will dedicate hardware to continuous fuzzing. As it finds issues, we will fix them in releases of YottaDB.

- We will start fuzz testing Octo.

In Conclusion

Although perfection does not exist in this universe, you can be assured of our continued commitment to your YottaDB experience being as rock solid, lightning fast, and secure as we can make it.

In closing, we would like to reiterate our thanks to Zachary Minneker and Security Innovation for introducing us to fuzz testing.

Images Used:

[1] Fuzz – Rock en Seine. Photo by Alexandre Pennetier.

[2] Keeley Fuzz Bender pedal. Photo by Skimel.

Screenshots courtesy Zachary Minneker.

Published on January 10, 2022