Edge Computing and YottaDB Everywhere

K.S. Bhaskar

Bottom Line Up Front – Computing on the Edge is Booming

Inexpensive System-on-a-Chip (SoC) computers are a game changer. With the BCM2835 chip, a Raspberry Pi Zero retails for $5, which means the SoC most likely costs less than $1 in bulk. That chip is roughly comparable to a 300MHz Pentium II CPU with the video capabilities of an original Xbox, and it is just one example of what is available in the market today. SoCs allow significant computing capabilities to be built into smart sensors and devices to enable ubiquitous computing.

Growth in computing driven by Moore’s Law since the 1960s resulted in waves back and forth between the cloud and the edge, with growth in one leading and producing complementary growth in the other. The current wave is on the edge, and still growing.

YottaDB powers computing on the edge too, not just in the cloud.[1]

Defining Terms

Let me define some terms as I use them:

- Computing is the process of calculating desired outputs from inputs. Inputs and outputs may be representational (such as a news report or a paycheck stub), or connected to sensors and actuators (such as a building’s climate control system).

- Real-time computing is the process of calculating desired outputs from inputs within a bounded time. For example, even if the route it recommends is less than optimal, a navigation app must respond in real time, because there is no value in telling a driver, “Turn right ¼ mile back.”

- Computing on the edge is computing performed at or near the source of inputs and destination of outputs, typically not in a physically secured environment.[2] “At or near” means that the time, cost, and robustness of moving data are assured. A self-driving car can use the cloud to help it navigate, because the worst consequence of a delay is a sub-optimal route, but it must rely on edge computing to autonomously recognize and brake for a pedestrian in front of it.

The First Wave – in the Data Center

Through most of the 1960s, computing was centralized. The first computer I ever programmed, an IBM 1620, had its own room in an air-conditioned computer center.[3] “Computing” at the edge was limited to analog computers, such as airplane autopilots. For anything more complex, data moved from where it was generated and consumed (the edge) to where it was computed (the data center) and back via Sneakernet in the form of punched cards, punched tape, and magnetic tape. Computing was most commonly “batch” processing, where one submitted a “job” on a deck of punched cards, and received the output hours, and occasionally days, later.

The Second Wave – Interactive / Personal Computing

Although the actual computing logic was still in the data center, the input could be produced, and output consumed, interactively on teletypewriters. Dropping costs and improvements in communication and hardware made computing economical outside the data center, in what we today call the edge.

Microprocessors like the Intel 8080 performed computation on the edge, originally in intelligent terminals. The growth of processing power and dropping prices allowed intelligent terminals to evolve into ever more capable personal computers. With the development of office suites, games, encyclopedias, and more, personal computing took off, leading to a boom in computing at the edge.

Change at the edge caused change in data centers. New online services in data centers allowed users in widely separated locations to interact in real time; a few, such as AOL and CompuServe, still exist today.

The Third Wave – from Data Center to Cloud

Pre-Internet online services were well-tended walled gardens, organized for easy navigation inside, but you were out of luck if you needed something outside their guarded walls. In contrast, the Internet was more like a wide-open plain, driven by better, faster, and cheaper communication, and organized around open communications protocols such as the Hypertext Transfer Protocol (HTTP) that allowed anyone to participate in the ecosystem. While you could get the information you wanted on “the web”, locating it was not always straightforward, leading to the popularity of search engines and browsers.

The growth of the web meant that browsers became ubiquitous, as did web servers that fed them data. From providing roadmaps to information on the web, it was a logical progression to other web-based services such as e-mail. Instead of lugging a PC around with downloaded e-mail, it became so much easier to leave the e-mail with an online service so that you could access it from whatever device you had available. Collaboration became easier and more timely than ever before. I write these words on an online service provider[4] rather than on an office suite on my laptop, making it that much easier and faster for my good friends and best critics – my editors – to prepare it for publication.

This also meant that IT departments no longer had to maintain infrastructure for commodity services but could rely on the economies of scale of cloud service providers. The cloud allows information to reside just about anywhere – Gandi, our web hosting provider, serves YottaDB web content to browsers around the world from a data center in Luxembourg.

While PC growth has stalled, and no one buys dedicated GPS units any more, the growth of the cloud was a beneficial catalyst for change at the edge. Technologies converged, and the basic cellphone morphed into a smartphone whose value as a platform for “apps” served by the cloud exceeds its value as a device for telephony.

Today’s Fourth Wave – Life on the Edge, or The Internet of Things

Compared to the IBM 7094 computers that controlled the Apollo lunar missions, a Raspberry Pi Zero has orders of magnitude more processing power, memory, and storage. Coupled with the growth in lightweight, inexpensive, low-power sensors, we are in the middle of an explosion of data generation on the edge.

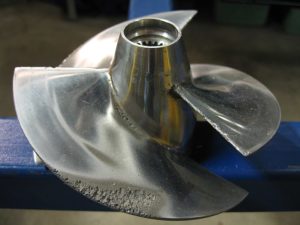

Consider a pump. It should spin fast to maximize the flow of liquid, but not so fast that the flow past the blades is turbulent (causing a loss of pumping efficiency), or that little bubbles form, resulting in cavitation damage. With an inexpensive resistance strain gauge on it, connected to inexpensive hardware like a Raspberry Pi Zero, using YottaDB to store the data, one can measure the vibration of the pump. Perform a Fast Fourier Transform (FFT) on the data, and when the pump is operating with laminar flow of the liquid past the blades, the FFT will have spikes at harmonics of the pump’s rotational speed with a certain baseline of noise between the spikes. As the flow past the blades becomes turbulent, that noise floor rises, and when cavitation starts to occur, it rises sharply. The software in the Raspberry Pi can control the speed of the pump to continuously operate it at maximum efficiency. This solution can be built into new pumps and also retrofitted to hundred-year-old pumps.
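As a rough sketch of the noise-floor idea, and not a description of any shipping pump controller, the following Python uses only the standard library. The sample rate, rotation speed, threshold, synthetic signals, and the function names `noise_floor` and `adjust_speed` are all assumptions made up for illustration; a real device would read the strain gauge and store the samples in YottaDB.

```python
import cmath
import math
import random
from statistics import median


def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def noise_floor(samples, sample_rate_hz, rotation_hz, guard_bins=2):
    """Median spectral magnitude of the bins that lie between harmonics."""
    n = len(samples)
    spectrum = fft(samples)
    bin_hz = sample_rate_hz / n
    magnitudes = []
    for k in range(1, n // 2):
        freq = k * bin_hz
        nearest_harmonic = max(1, round(freq / rotation_hz)) * rotation_hz
        # Skip the spikes at (and right next to) harmonics of the rotation speed.
        if abs(freq - nearest_harmonic) > guard_bins * bin_hz:
            magnitudes.append(abs(spectrum[k]) / n)
    return median(magnitudes)


def adjust_speed(speed_hz, floor, threshold, step_hz=1.0):
    """Hypothetical control rule: back off when the noise floor is too high."""
    return speed_hz - step_hz if floor > threshold else speed_hz + step_hz


def synthetic_vibration(n, sample_rate_hz, rotation_hz, noise_amplitude, seed=0):
    """Made-up signal: two harmonics of the rotation speed plus Gaussian noise."""
    rng = random.Random(seed)
    return [
        math.sin(2 * math.pi * rotation_hz * t / sample_rate_hz)
        + 0.5 * math.sin(2 * math.pi * 2 * rotation_hz * t / sample_rate_hz)
        + rng.gauss(0.0, noise_amplitude)
        for t in range(n)
    ]


SAMPLE_RATE_HZ = 1024  # assumed sensor sampling rate
ROTATION_HZ = 32       # assumed pump rotation speed

laminar = synthetic_vibration(1024, SAMPLE_RATE_HZ, ROTATION_HZ, noise_amplitude=0.05)
turbulent = synthetic_vibration(1024, SAMPLE_RATE_HZ, ROTATION_HZ, noise_amplitude=0.5)

laminar_floor = noise_floor(laminar, SAMPLE_RATE_HZ, ROTATION_HZ)
turbulent_floor = noise_floor(turbulent, SAMPLE_RATE_HZ, ROTATION_HZ)
```

Here the "turbulent" signal is simply the same harmonics with more noise added, so its floor comes out higher and `adjust_speed` backs the pump off. The median is used for the floor because it is insensitive to the few very large bins at the harmonics; a real controller would also need a calibrated threshold for the particular pump.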

While such optimization has long been possible, it was economically viable only for large-scale industrial pumps such as those in an oil refinery. Today, it is economically viable for a broad range of applications, such as those one might find in light industry or agriculture. Such real-time performance tuning is widely applicable even for personal projects – for example, to allow a home theater system to adjust the sound to the presence of people (who change a room’s acoustics).

The explosion of data on the edge creates opportunities in the cloud, both for decision support / transaction processing and for machine learning. Data on the edge can be acted on in real time at the edge, and also sent to the cloud for more intensive computation to improve the effectiveness of computing at the edge with better algorithms and heuristics. The following example is borderline fourth wave, because the smartphone is too expensive to be ubiquitous on the edge, but it illustrates the type of “Internet of Things” application stack that inexpensive, pervasive computing and sensors on the edge make possible, and a use case that we routinely benefit from:

- A navigation app receives time and location information from satellites to compute its location. The map is a graph whose nodes are locations connected by weighted edges. Each edge has weights based on distance, transit time based on speed limit, transit time based on traffic, expected fuel consumption, etc. A graph traversal algorithm, instructed by the user on what to optimize, computes navigation instructions. This computation must be performed entirely at the edge to ensure timeliness of the result.

- The navigation app transmits data such as its location, heading, speed, and destination to the cloud. Decision support software in the cloud receives this information from navigation apps in multiple vehicles, calculates actual transit times for each edge, and sends the updated transit times to navigation apps, which can then dynamically update the navigation instructions they generate. While the navigation apps must execute in real time, the decision support software executes in near real time – transit times based on traffic and weather delays are statistical aggregations over short periods of time rather than the real-time instructions from the navigation app to the user.

- If you are driving across a large city, current transit times based on traffic and weather for edges corresponding to locations which you may not pass through for a half hour are less important for calculating routes than estimates of what they are likely to be when you get there. This requires deeper information such as historical traffic patterns, local weather forecast, and knowledge about planned crowd-generating activities such as sporting events. Generating this type of projected information for navigation apps requires cloud-based machine learning to generate algorithms that can then make projections.
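The edge side of this stack can be sketched in a few lines of Python. This is an illustration only, using Dijkstra's algorithm as one possible graph traversal; the road map, node names, weight keys, and the function `best_route` are all invented for the example, and a real navigation app may well use a different algorithm.

```python
import heapq


def best_route(graph, start, goal, weight):
    """Dijkstra's algorithm over edges weighted by the chosen criterion.

    graph maps node -> list of (neighbor, {criterion: cost}).
    Returns (total_cost, [nodes on the path]), or (inf, []) if unreachable.
    """
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, weights in graph.get(node, []):
            if neighbor not in settled:
                heapq.heappush(
                    queue, (cost + weights[weight], neighbor, path + [neighbor])
                )
    return float("inf"), []


# Hypothetical map: each edge carries several weights, as described above.
road_map = {
    "home": [
        ("highway", {"km": 5.0, "minutes": 4.0}),
        ("main_st", {"km": 2.0, "minutes": 5.0}),
    ],
    "highway": [("office", {"km": 6.0, "minutes": 5.0})],
    "main_st": [("office", {"km": 3.0, "minutes": 8.0})],
    "office": [],
}

# The user chooses what to optimize.
shortest = best_route(road_map, "home", "office", "km")
fastest = best_route(road_map, "home", "office", "minutes")

# The cloud reports heavy traffic on the highway; the app recomputes locally.
road_map["highway"][0][1]["minutes"] = 20.0
rerouted = best_route(road_map, "home", "office", "minutes")
```

With these made-up weights, the shortest route goes via `main_st`, the fastest route initially goes via `highway`, and after the traffic update the fastest route also switches to `main_st`. The route computation itself never leaves the device; only the edge weights come from the cloud.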

A fourth wave application stack based on our pump example above might look like this:

- In addition to the vibration sensor, each pump has an inexpensive input power sensor and output flow meter. The vibration sensor and software in each pump operate that pump at maximum efficiency, and the power sensor and output flow meter generate data that is collected by YottaDB and processed centrally (“in the cloud”). Also, the energy required to start the motor of each pump is easily computed at the edge from the energy consumption of multiple pumping cycles.

- If there is a pumping station with a reservoir of liquid and a set of pumps to pump it to the next stage, software in the pumping station can turn on and cycle pumps as needed, optimizing different parameters as directed, such as energy efficiency, pumping time, pumping deadline, volume required by consumers of the liquid pumped, etc.

- Machine learning can analyze pumping data as well as other information such as energy cost and pump failure data to make decisions to direct pumping operations, for example:

  - When the sun shines, operate pumps for maximum pumping efficiency, e.g., because electricity comes from solar panels and has zero marginal cost.

  - Otherwise, operate pumps for maximum energy efficiency, e.g., because without solar energy available the pumps must draw on battery power.

- If failure data is available, machine learning and statistical analysis in the cloud can also direct pump operation to minimize the probability of failure till the next maintenance period.
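One way the startup energy mentioned in the first bullet might be estimated at the edge is sketched below. It assumes, purely for illustration, that the energy of a pumping cycle is a fixed startup cost plus a constant running power times the cycle's duration, and fits that line by ordinary least squares. The function name `estimate_startup_energy` and the sample readings are hypothetical.

```python
def estimate_startup_energy(cycles):
    """Fit energy = startup + power * duration by ordinary least squares.

    cycles is a list of (duration_s, energy_j) pairs with varying durations.
    Returns (startup_energy_j, running_power_w).
    """
    n = len(cycles)
    mean_t = sum(t for t, _ in cycles) / n
    mean_e = sum(e for _, e in cycles) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in cycles)
    sxy = sum((t - mean_t) * (e - mean_e) for t, e in cycles)
    power = sxy / sxx                  # slope: energy per second of running
    startup = mean_e - power * mean_t  # intercept: energy at zero run time
    return startup, power


# Made-up readings from the input power sensor: (seconds run, joules used).
cycles = [(60.0, 6500.0), (120.0, 12500.0), (300.0, 30500.0), (600.0, 60500.0)]
startup_j, power_w = estimate_startup_energy(cycles)
```

The intercept of the fitted line is the energy a cycle would consume at zero run time, which under this assumed model is the cost of starting the motor. The fit needs cycles of at least two different durations; otherwise the slope is undefined.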

YottaDB Everywhere

On the Edge

YottaDB is very parsimonious with computing resources. The engine itself occupies just 10-12MB of space, even as it can operate databases that are limited only by available storage. Because it runs without a database daemon, it lends itself to low-power smart sensors and devices on the edge.

The robustness of YottaDB makes it a good candidate for devices that must operate autonomously. Databases can be configured to recover automatically after a power outage. Database replication means that the data can be streamed in real time to a hub or integration point, and indeed this allows for sensors and devices that have no persistent read-write storage – when such a device powers up, its database is restored from the replica on the hub or integration point.

YottaDB also gives you your choice of programming language. Effective r1.20, its C API lets you program in C and, since C is the lingua franca of computing, in any language that can call C APIs. YottaDB also includes a complete implementation of M (also known as MUMPS), which was used to implement powerful software on computers far less capable than a Raspberry Pi Zero. The choice is yours.

In the Cloud

The following representative example illustrates YottaDB’s ability to handle very large databases. Consider a factory making equipment (such as vehicles) each of which has 100 (1E2) sensors. If the factory makes 100,000 (1E5) units per year, that is 10,000,000 (1E7) streams of data. If each sensor generates on average 1 byte/second during 1,000,000 (1E6) seconds of operation per year, that amounts to 10TB (1E13 bytes) per year, all of which can go into a single YottaDB database. If the factory has 10,000 (1E4) machines, each of which has 100 (1E2) sensors, that data can also go into the same database. With YottaDB everywhere, the data can be moved seamlessly and smoothly, under the direction of the design engineers, between the edge and the cloud. Warranty and service information can also go into the same database.
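The arithmetic behind those figures is easy to check. In the short Python sketch below, the constants for the equipment come straight from the example; the last two lines additionally assume that the factory's own machines produce data at the same rate as the equipment, which the example does not state.

```python
SENSORS_PER_UNIT = 100                  # 1E2
UNITS_PER_YEAR = 100_000                # 1E5
BYTES_PER_SENSOR_SECOND = 1
OPERATING_SECONDS_PER_YEAR = 1_000_000  # 1E6

streams = SENSORS_PER_UNIT * UNITS_PER_YEAR
bytes_per_year = streams * BYTES_PER_SENSOR_SECOND * OPERATING_SECONDS_PER_YEAR
terabytes_per_year = bytes_per_year / 1e12

# Assumption for illustration: factory machines generate data at the same
# rate and for the same number of seconds per year as the equipment.
factory_streams = 10_000 * 100          # machines x sensors per machine
factory_bytes_per_year = (
    factory_streams * BYTES_PER_SENSOR_SECOND * OPERATING_SECONDS_PER_YEAR
)

print(f"{streams:.0e} streams, {terabytes_per_year:.0f} TB per year")
```

Under that added assumption, the factory's own machines contribute another 1E12 bytes (1TB) per year, an order of magnitude less than the equipment it ships.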

With statistical and machine learning techniques operating on the database, the manufacturer can generate predictive models that use data streams from sensors at the edge to anticipate failures and schedule maintenance before they occur.

In Closing, Another Example

Beyond the discussion in this blog post, this hypothetical parking use case is representative of an Internet of Things application stack that spans the levels from sensors on the edge to machine learning in the cloud, and discusses how YottaDB can provide data persistence at every level of the computing stack.

[1]. “Cloud” is just a recently invented term for the ability to remotely control computation environments.

[2]. Although some physical security can be implemented for edge computing devices, an attacker ultimately has physical access to edge computing devices.

[3]. On a personal note, that the computer center was the only air-conditioned building on campus in a city where summer temperatures routinely exceeded 100℉ hooked me onto computing.

[4]. A shout out to our provider Zoho, which provides a complete set of services for a small business like ours.

Featured Image: At electric driven pump of a water work nearby the Hengstey See, Germany, by Markus Schweiss

Published on March 06, 2018